About the research topic

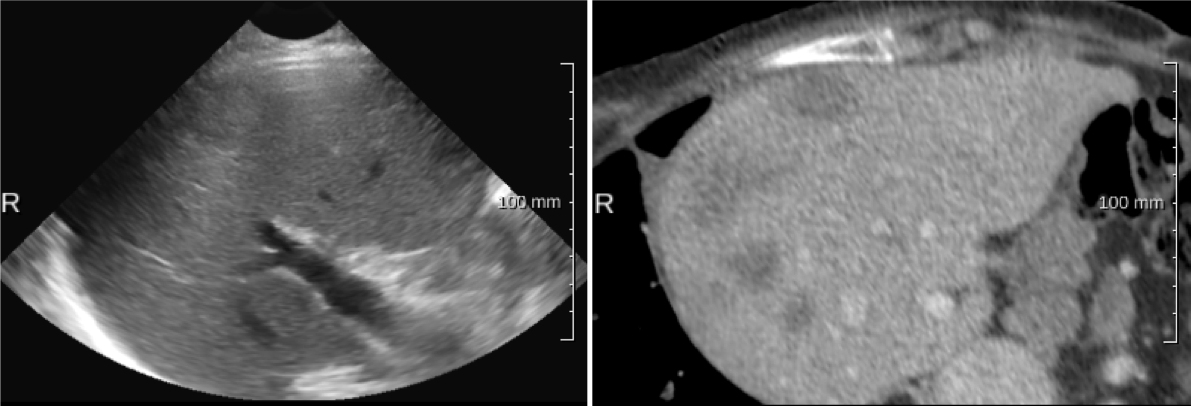

Ultrasound (US) is the preferred modality for image-guided ablation procedures in the liver, e.g., radiofrequency ablation (RFA). However, lesions are not always clearly visible in ultrasound images, whereas they are generally visible in diagnostic CT/MR images. Fusing the CT and US images provides complementary information, thereby facilitating image guidance for RFA.

The main purpose of this project is to align CT and ultrasound images. To this end, we have been working on the following three subjects:

- static image-based CT-US registration using a block-matching-based framework and deep learning methods

- US image stitching, with the aim of supporting image-based initialization of CT-US registration

- CT-US initial alignment with compound ultrasound images

Output

Multiple-correlation similarity for block-matching based fast CT to ultrasound registration in liver interventions

J Banerjee, Y Sun et al., MedIA 2019:

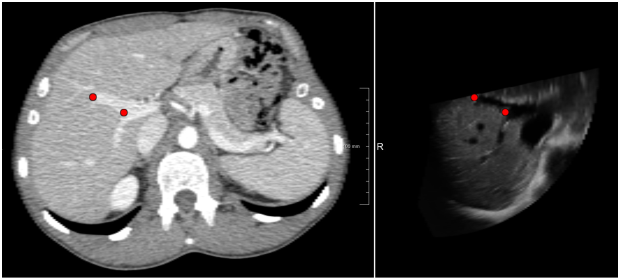

In this work we present a fast approach to perform registration of computed tomography to ultrasound volumes for image guided intervention applications. The method is based on a combination of block-matching and outlier rejection. The block-matching uses a correlation based multimodal similarity metric, where the intensity and the gradient of the computed tomography images along with the ultrasound volumes are the input images to find correspondences between blocks in the computed tomography and the ultrasound volumes. A variance and octree based feature point-set selection method is used for selecting distinct and evenly spread point locations for block-matching. Geometric consistency and smoothness criteria are imposed in an outlier rejection step to refine the block-matching results. The block-matching results after outlier rejection are used to determine the affine transformation between the computed tomography and the ultrasound volumes. Various experiments are carried out to assess the optimal performance and the influence of parameters on accuracy and computational time of the registration. A leave-one-patient-out cross-validation registration error of 3.6 mm is achieved over 29 datasets, acquired from 17 patients.
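The last step described above, turning the surviving block matches into an affine transformation, can be illustrated with an ordinary least-squares fit. This is a minimal sketch, not the implementation from the paper: the function name `fit_affine`, the synthetic point sets, and the choice of a plain least-squares solver are all assumptions made for illustration.

```python
import numpy as np


def fit_affine(src, dst):
    """Least-squares affine transform mapping src points onto dst points.

    src, dst: (N, 3) arrays of matched block centres (hypothetical inputs;
    N >= 4 and the points must not all lie in one plane).
    Returns a 4x4 homogeneous matrix.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    # Homogeneous source coordinates: each row is [x, y, z, 1].
    src_h = np.hstack([src, np.ones((len(src), 1))])
    # Solve src_h @ params ~= dst for the 4x3 parameter matrix.
    params, *_ = np.linalg.lstsq(src_h, dst, rcond=None)
    affine = np.eye(4)
    affine[:3, :] = params.T
    return affine


# Synthetic check: generate matches from a known affine and recover it.
rng = np.random.default_rng(0)
true_affine = np.array([
    [1.02, 0.01, 0.00, 5.0],
    [-0.01, 0.98, 0.02, -3.0],
    [0.00, -0.02, 1.01, 2.0],
    [0.0, 0.0, 0.0, 1.0],
])
ct_points = rng.uniform(0.0, 100.0, size=(50, 3))
us_points = ct_points @ true_affine[:3, :3].T + true_affine[:3, 3]
estimated = fit_affine(ct_points, us_points)
```

A plain least-squares fit is sensitive to wrong matches, which is why it only makes sense after an outlier rejection step such as the one the abstract describes.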

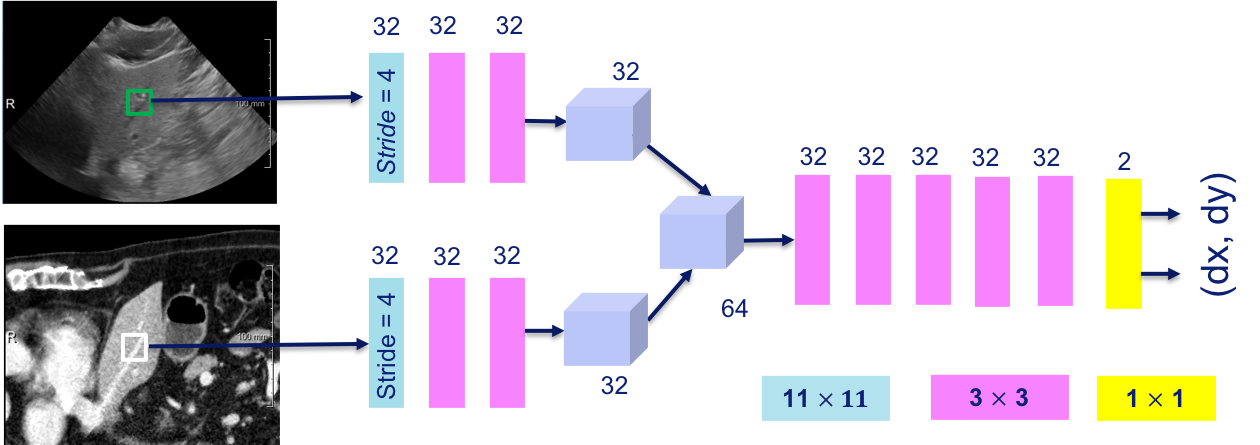

Towards Robust CT-Ultrasound Registration Using Deep Learning Methods

Y Sun et al., MICCAI 2018 Workshop:

Multi-modal registration, especially CT/MR to ultrasound (US), is still a challenge, as conventional similarity metrics such as mutual information do not match the imaging characteristics of ultrasound. The main motivation for this work is to investigate whether a deep learning network can directly estimate the displacement between a pair of multi-modal image patches, without explicitly using a similarity metric and an optimizer, the two main components of a registration framework. The proposed DVNet is a fully convolutional neural network trained on a large set of artificially generated displacement vectors (DVs). The DVNet was evaluated on mono-modal and simulated multi-modal data, as well as on real CT and US liver slices (selected from 3D volumes). The results show that the DVNet is quite robust on the mono-modal and simulated multi-modal data, but does not yet work on the real CT and US images.
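To make the idea of artificially generated displacement vectors concrete, the sketch below cuts a fixed patch and a shifted moving patch from one 2D image, so that the known shift can serve as a regression target. It is only an illustration under stated assumptions: the function `make_training_pair`, the integer-pixel shifts, the patch size, and the mono-modal setting are hypothetical choices and are not taken from the DVNet paper.

```python
import numpy as np


def make_training_pair(image, patch_size, max_shift, rng):
    """Cut a fixed patch and a displaced moving patch from a 2D image.

    Returns (fixed, moving, dv), where dv = (dy, dx) is the known
    displacement in pixels that a network would be trained to predict.
    """
    h, w = image.shape
    # Random integer displacement in [-max_shift, max_shift] per axis.
    dy, dx = rng.integers(-max_shift, max_shift + 1, size=2)
    # Pick the fixed patch corner so that both patches stay inside the image.
    y0 = rng.integers(max_shift, h - patch_size - max_shift + 1)
    x0 = rng.integers(max_shift, w - patch_size - max_shift + 1)
    fixed = image[y0:y0 + patch_size, x0:x0 + patch_size]
    moving = image[y0 + dy:y0 + dy + patch_size,
                   x0 + dx:x0 + dx + patch_size]
    return fixed, moving, np.array([dy, dx])


# Toy image with unique pixel values, so the shift is easy to verify.
rng = np.random.default_rng(0)
image = np.arange(64 * 64).reshape(64, 64)
fixed, moving, dv = make_training_pair(image, patch_size=16, max_shift=4, rng=rng)
```

A simulated multi-modal pair could be obtained by applying an intensity mapping to one of the two patches, but how the paper simulated its multi-modal data is not stated here, so that step is left out.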

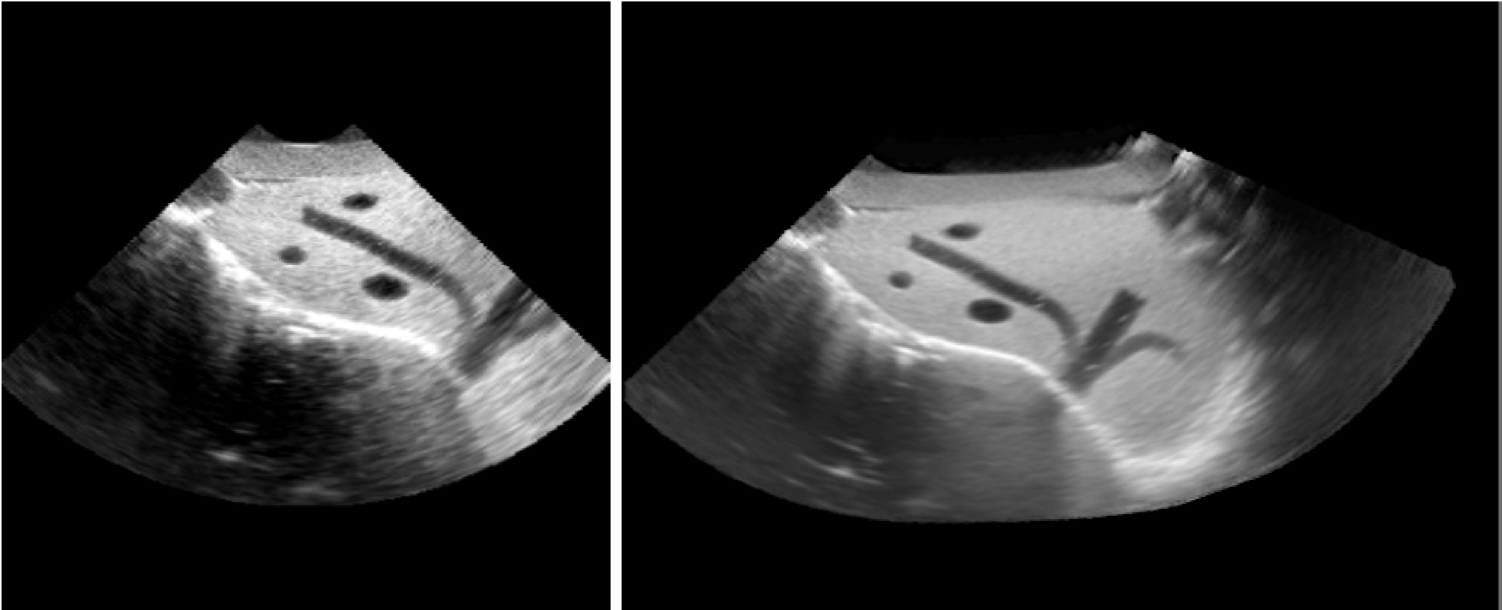

Transformation optimization and image blending for 3D liver ultrasound series stitching

Y Sun et al., MICCAI 2019 submitted:

We propose a consistent ultrasound volume stitching framework, with the intention to produce a volume with higher image quality and extended field-of-view in this work. Directly using pair-wise registrations for stitching may lead to geometric errors. Therefore, we propose an approach to improve the image alignment by optimizing a consistency metric over multiple pairwise registrations. In the optimization, we utilize transformed points to effectively compute a distance between rigid transformations. The method has been evaluated on synthetic, phantom and clinical data. The results indicate that our transformation optimization method is effective and our stitching framework has a good geometric precision. Also, the compound images have been demonstrated to have improved CNR values.
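The idea of using transformed points to measure a distance between rigid transformations can be sketched as follows. This is a minimal illustration rather than the metric from the paper: the helper names, the choice of cube corners as reference points, and the use of the mean Euclidean distance are assumptions.

```python
import numpy as np


def transform_points(T, points):
    """Apply a 4x4 homogeneous transform to an (N, 3) array of points."""
    return points @ T[:3, :3].T + T[:3, 3]


def transform_distance(T_a, T_b, points):
    """Mean Euclidean distance between the points mapped by T_a and by T_b."""
    diff = transform_points(T_a, points) - transform_points(T_b, points)
    return float(np.linalg.norm(diff, axis=1).mean())


def translation(t):
    """Pure-translation transform, used here only to build a toy example."""
    T = np.eye(4)
    T[:3, 3] = t
    return T


# Reference points: corners of a 100 mm cube (an assumed stand-in for a volume).
corners = np.array(
    [[x, y, z] for x in (0, 100) for y in (0, 100) for z in (0, 100)],
    dtype=float,
)

# Three volumes A, B, C with pairwise registrations A->B, B->C and A->C.
T_ab = translation([10.0, 0.0, 0.0])
T_bc = translation([0.0, 5.0, 0.0])
T_ac = translation([10.0, 5.0, 1.0])  # direct registration, off by 1 mm in z

# Consistency error: the direct A->C result versus the composed A->B->C path.
loop_error = transform_distance(T_ac, T_bc @ T_ab, corners)
```

In this toy case `loop_error` is 1.0, the 1 mm disagreement between the direct and the composed path. A point-based distance like this expresses rotation and translation differences in one unit (millimetres), which is convenient when a single consistency value has to be minimized over several pairwise registrations.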
